| Time | Users | Delta | Toots | TNR | Title | Version | maxTL |
|---|---|---|---|---|---|---|---|
| Wed 24.07.2024 00:01:07 | 61,956 | 0 | 3,574,077 | 57.7 | Fosstodon | 4.2.10 | 500 |
| Tue 23.07.2024 00:00:03 | 61,956 | -3 | 3,570,705 | 57.6 | Fosstodon | 4.2.10 | 500 |
| Mon 22.07.2024 00:01:10 | 61,959 | +1 | 3,567,825 | 57.6 | Fosstodon | 4.2.10 | 500 |
| Sun 21.07.2024 00:01:07 | 61,958 | +1 | 3,564,861 | 57.5 | Fosstodon | 4.2.10 | 500 |
| Sat 20.07.2024 00:01:10 | 61,957 | +1 | 3,561,604 | 57.5 | Fosstodon | 4.2.10 | 500 |
| Fri 19.07.2024 13:57:34 | 61,956 | -1 | 3,558,474 | 57.4 | Fosstodon | 4.2.10 | 500 |
| Thu 18.07.2024 00:00:27 | 61,957 | +1 | 3,553,476 | 57.4 | Fosstodon | 4.2.10 | 500 |
| Wed 17.07.2024 00:01:10 | 61,956 | -1 | 3,550,157 | 57.3 | Fosstodon | 4.2.10 | 500 |
| Tue 16.07.2024 00:00:36 | 61,957 | +6 | 3,547,999 | 57.3 | Fosstodon | 4.2.10 | 500 |
| Mon 15.07.2024 00:00:01 | 61,951 | 0 | 3,544,794 | 57.2 | Fosstodon | 4.2.10 | 500 |

Daniel Demmel (@daaain) · 04/2022 · Toots: 388 · Followers: 142

Wed 24.07.2024 00:33

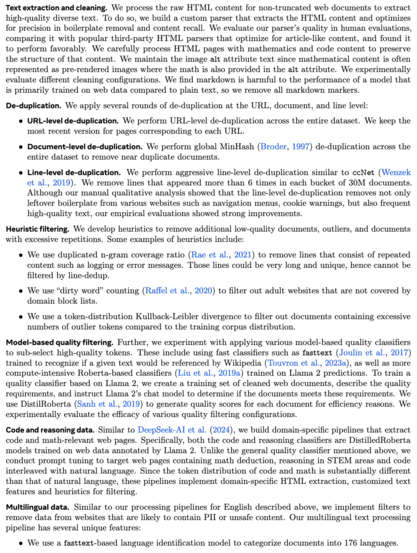

Sounds like we're reaching the limits of LLM training data from the web – screenshot from the Llama 3.1 paper: https://ai.meta.com/research/publications/the-llama-3-herd-of-models/

Text extraction and cleaning. We process the raw HTML content for non-truncated web documents to extract high-quality diverse text. To do so, we build a custom parser that extracts the HTML content and optimizes for precision in boilerplate removal and content recall. We evaluate our parser’s quality in human evaluations, comparing it with popular third-party HTML parsers that optimize for article-like content, and found it to perform favorably. We carefully process HTML pages with mathematics and code content to preserve the structure of that content. We maintain the image alt attribute text since mathematical content is often represented as pre-rendered images where the math is also provided in the alt attribute. We experimentally evaluate different cleaning configurations. We find markdown is harmful to the performance of a model that is primarily trained on web data compared to plain text, so we remove all markdown markers.

De-duplication. We apply several rounds of de-duplication at the URL, document, and line level:

- URL-level de-duplication. We perform URL-level de-duplication across the entire dataset. We keep the most recent version for pages corresponding to each URL.
- Document-level de-duplication. We perform global MinHash (Broder, 1997) de-duplication across the entire dataset to remove near duplicate documents. ....
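To make the two de-duplication steps quoted above more concrete, here is a minimal Python sketch. It is not the Llama 3.1 pipeline: the record fields (`url`, `fetched`, `text`), the function names, the 3-word shingles, the 64 hash functions, and the 0.8 similarity threshold are all assumptions chosen for illustration. The pairwise comparison is also a simplification, since a web-scale pipeline would likely bucket MinHash signatures (for example with locality-sensitive hashing) instead of comparing every pair.

```python
import hashlib
import re


def dedupe_by_url(docs):
    """URL-level step: keep only the most recently fetched document per URL.

    Each doc is a dict with hypothetical keys "url", "fetched", and "text".
    """
    latest = {}
    for doc in docs:
        kept = latest.get(doc["url"])
        if kept is None or doc["fetched"] > kept["fetched"]:
            latest[doc["url"]] = doc
    return list(latest.values())


def shingles(text, k=3):
    """Return the set of k-word shingles of a text (lowercased)."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash_signature(text, num_hashes=64):
    """Build a MinHash signature: one minimum hash value per salted hash."""
    shingle_set = shingles(text)
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(8, "little")  # a different salt per hash function
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode("utf-8"), digest_size=8, salt=salt).digest(),
                "big",
            )
            for s in shingle_set
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching signature slots, an estimate of Jaccard similarity."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)


def dedupe_near_duplicates(docs, threshold=0.8):
    """Document-level step: drop docs whose signature is too close to a kept one.

    Pairwise comparison is quadratic and only suitable for small examples.
    """
    kept, kept_sigs = [], []
    for doc in docs:
        sig = minhash_signature(doc["text"])
        if all(estimated_jaccard(sig, other) < threshold for other in kept_sigs):
            kept.append(doc)
            kept_sigs.append(sig)
    return kept
```

Running `dedupe_by_url` first and passing its result to `dedupe_near_duplicates` mirrors the order in the excerpt: exact URL collisions are resolved cheaply before the more expensive similarity check.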

[Public] Replies: 0 Boosts: 0 Favs: 0